Creating the Shared Disks

Recreating the Logical Volumes

- Use the lvdisplay [volume group] command to list the existing logical volumes.

lvdisplay vg1 | grep "LV Path"

lvdisplay vg2 | grep "LV Path"

[root@mysvr:/home/tac]$ lvdisplay vg1 | grep "LV Path"

LV Path /dev/vg1/redo001

LV Path /dev/vg1/redo011

LV Path /dev/vg1/redo021

LV Path /dev/vg1/redo031

LV Path /dev/vg1/redo041

LV Path /dev/vg1/redo051

LV Path /dev/vg1/vol_256m_01

LV Path /dev/vg1/vol_256m_02

LV Path /dev/vg1/vol_256m_03

LV Path /dev/vg1/vol_256m_04

LV Path /dev/vg1/vol_256m_05

LV Path /dev/vg1/vol_256m_06

LV Path /dev/vg1/vol_256m_07

LV Path /dev/vg1/vol_256m_08

LV Path /dev/vg1/vol_512m_01

LV Path /dev/vg1/vol_512m_02

LV Path /dev/vg1/vol_512m_03

LV Path /dev/vg1/cm_01

LV Path /dev/vg1/vol_512m_04

LV Path /dev/vg1/control_01

- Use the lvremove [lv path] command to delete each logical volume.

lvremove /dev/vg1/redo001 -y

lvremove /dev/vg1/redo011 -y

lvremove /dev/vg1/redo021 -y

lvremove /dev/vg1/redo031 -y

lvremove /dev/vg1/redo041 -y

lvremove /dev/vg1/redo051 -y

lvremove /dev/vg1/vol_256m_01 -y

lvremove /dev/vg1/vol_256m_02 -y

lvremove /dev/vg1/vol_256m_03 -y

lvremove /dev/vg1/vol_256m_04 -y

lvremove /dev/vg1/vol_256m_05 -y

lvremove /dev/vg1/vol_256m_06 -y

lvremove /dev/vg1/vol_256m_07 -y

lvremove /dev/vg1/vol_256m_08 -y

lvremove /dev/vg1/vol_512m_01 -y

lvremove /dev/vg1/vol_512m_02 -y

lvremove /dev/vg1/vol_512m_03 -y

lvremove /dev/vg1/cm_01 -y

lvremove /dev/vg1/vol_512m_04 -y

lvremove /dev/vg1/control_01 -y

- Use the lvcreate -L [size] -n [logical volume name] [volume group name] command to recreate the logical volumes.

- Each volume group is 5G, so create four 1G volumes and one 1000M volume in each group.

lvcreate -L 1G -n vol_1G_01 vg1

lvcreate -L 1G -n vol_1G_02 vg1

lvcreate -L 1G -n vol_1G_03 vg1

lvcreate -L 1G -n vol_1G_04 vg1

lvcreate -L 1000 -n vol_1000m_05 vg1

lvcreate -L 1G -n vol_1G_01 vg2

lvcreate -L 1G -n vol_1G_02 vg2

lvcreate -L 1G -n vol_1G_03 vg2

lvcreate -L 1G -n vol_1G_04 vg2

lvcreate -L 1000 -n vol_1000m_05 vg2
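The ten lvcreate commands above follow one pattern per volume group, so they can be generated rather than typed. The following is a minimal dry-run sketch: the function name gen_lvcreate is hypothetical, and it only prints the commands so they can be reviewed before running them as root.

```shell
# Dry-run sketch: print the lvcreate commands for one volume group.
# Nothing is executed here; review the output before running it as root.
gen_lvcreate() {
    local vg="$1"
    local i
    for i in 1 2 3 4; do
        printf 'lvcreate -L 1G -n vol_1G_%02d %s\n' "$i" "$vg"
    done
    # The fifth volume is 1000 MiB (lvcreate -L defaults to megabytes).
    printf 'lvcreate -L 1000 -n vol_1000m_05 %s\n' "$vg"
}

for vg in vg1 vg2; do
    gen_lvcreate "$vg"
done
```

Printing instead of executing keeps the sketch safe to run on any machine; the output matches the command list above line for line.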

- Verify the newly created logical volumes.

[root@mysvr:/home/tac]$ lvdisplay vg1 |grep "LV Path"

LV Path /dev/vg1/vol_1G_01

LV Path /dev/vg1/vol_1G_02

LV Path /dev/vg1/vol_1G_03

LV Path /dev/vg1/vol_1G_04

LV Path /dev/vg1/vol_1000m_05

[root@mysvr:/home/tac]$ lvdisplay vg2 |grep "LV Path"

LV Path /dev/vg2/vol_1G_01

LV Path /dev/vg2/vol_1G_02

LV Path /dev/vg2/vol_1G_03

LV Path /dev/vg2/vol_1G_04

LV Path /dev/vg2/vol_1000m_05

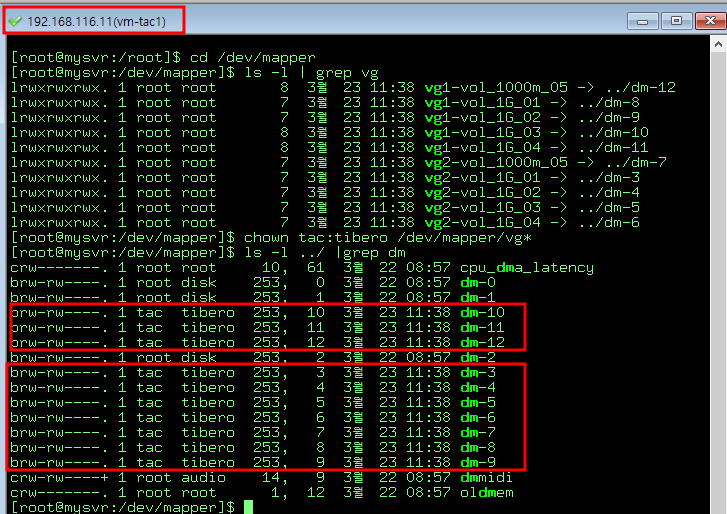

- On each node (TAC1, TAC2), set the ownership of the volumes and verify it.

cd /dev/mapper

ls -l | grep vg

chown tac:tibero /dev/mapper/vg*

ls -l ../ | grep dm
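Instead of reading the ls -l output by eye, the ownership can be checked with a small helper. This is a sketch under assumptions: check_owner is a hypothetical name, and stat -L -c is the GNU coreutils form (the -L follows the /dev/mapper symlinks to the underlying dm-N nodes, which is what the ls -l ../ | grep dm step inspects).

```shell
# check_owner EXPECTED PATH...
# Prints every path whose owner:group differs from EXPECTED and returns
# non-zero if any mismatch (or unreadable path) was found.
check_owner() {
    local expected="$1"
    local rc=0
    local path actual
    shift
    for path in "$@"; do
        # -L dereferences symlinks; %U:%G prints owner and group names.
        actual=$(stat -L -c '%U:%G' "$path") || { rc=1; continue; }
        if [ "$actual" != "$expected" ]; then
            echo "MISMATCH $path: $actual (expected $expected)"
            rc=1
        fi
    done
    return "$rc"
}

# Example, to be run on each node:
#   check_owner tac:tibero /dev/mapper/vg*
```

Running it on both TAC1 and TAC2 gives a quick pass/fail answer for the chown step.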

Installing TAS and TAC

Tibero profile and configuration files (environment setup)

Nodes: tac1 (server node1) - 192.168.116.11, tac2 (server node2) - 192.168.116.12

tac1.profile (node tac1):

export TB_HOME=/home/tac/tibero7

export CM_HOME=/home/tac/tibero7

export TB_SID=tac1

export CM_SID=cm1

export PATH=.:$TB_HOME/bin:$TB_HOME/client/bin:$CM_HOME/scripts:$PATH

export LD_LIBRARY_PATH=.:$TB_HOME/lib:$TB_HOME/client/lib:/lib:/usr/lib:/usr/local/lib:/usr/lib/threads

export LD_LIBRARY_PATH_64=$TB_HOME/lib:$TB_HOME/client/lib:/usr/lib64:/usr/lib/64:/usr/ucblib:/usr/local/lib

export LIBPATH=$LD_LIBRARY_PATH

export LANG=ko_KR.eucKR

export LC_ALL=ko_KR.eucKR

export LC_CTYPE=ko_KR.eucKR

export LC_NUMERIC=ko_KR.eucKR

export LC_TIME=ko_KR.eucKR

export LC_COLLATE=ko_KR.eucKR

export LC_MONETARY=ko_KR.eucKR

export LC_MESSAGES=ko_KR.eucKR

tac2.profile (node tac2):

export TB_HOME=/home/tac/tibero7

export CM_HOME=/home/tac/tibero7

export TB_SID=tac2

export CM_SID=cm2

export PATH=.:$TB_HOME/bin:$TB_HOME/client/bin:$CM_HOME/scripts:$PATH

export LD_LIBRARY_PATH=.:$TB_HOME/lib:$TB_HOME/client/lib:/lib:/usr/lib:/usr/local/lib:/usr/lib/threads

export LD_LIBRARY_PATH_64=$TB_HOME/lib:$TB_HOME/client/lib:/usr/lib64:/usr/lib/64:/usr/ucblib:/usr/local/lib

export LIBPATH=$LD_LIBRARY_PATH

export LANG=ko_KR.eucKR

export LC_ALL=ko_KR.eucKR

export LC_CTYPE=ko_KR.eucKR

export LC_NUMERIC=ko_KR.eucKR

export LC_TIME=ko_KR.eucKR

export LC_COLLATE=ko_KR.eucKR

export LC_MONETARY=ko_KR.eucKR

export LC_MESSAGES=ko_KR.eucKR

cm1.tip (node tac1):

#Value used as the node ID when configuring the cluster

CM_NAME=cm1

#Port used to connect to CM when running cmrctl commands

CM_UI_PORT=61040

CM_HEARTBEAT_EXPIRE=450

CM_WATCHDOG_EXPIRE=400

LOG_LVL_CM=5

CM_RESOURCE_FILE=/home/tac/cm_resource/cmfile

CM_RESOURCE_FILE_BACKUP=/home/tac/cm_resource/cmfile_backup

CM_RESOURCE_FILE_BACKUP_INTERVAL=1

CM_LOG_DEST=/home/tac/tibero7/instance/tac1/log/cm

CM_GUARD_LOG_DEST=/home/tac/tibero7/instance/tac1/log/cm_guard

CM_FENCE=Y

CM_ENABLE_FAST_NET_ERROR_DETECTION=Y

_CM_CHECK_RUNLEVEL=Y

cm2.tip (node tac2):

#Value used as the node ID when configuring the cluster

CM_NAME=cm2

#Port used to connect to CM when running cmrctl commands

CM_UI_PORT=61040

CM_HEARTBEAT_EXPIRE=450

CM_WATCHDOG_EXPIRE=400

LOG_LVL_CM=5

CM_RESOURCE_FILE=/home/tac/cm_resource/cmfile

CM_RESOURCE_FILE_BACKUP=/home/tac/cm_resource/cmfile_backup

CM_RESOURCE_FILE_BACKUP_INTERVAL=1

CM_LOG_DEST=/home/tac/tibero7/instance/tac2/log/cm

CM_GUARD_LOG_DEST=/home/tac/tibero7/instance/tac2/log/cm_guard

CM_FENCE=Y

CM_ENABLE_FAST_NET_ERROR_DETECTION=Y

_CM_CHECK_RUNLEVEL=Y

tas1.tip (node tac1):

DB_NAME=tas

LISTENER_PORT=3000

MAX_SESSION_COUNT=10

TOTAL_SHM_SIZE=1G

MEMORY_TARGET=2G

BOOT_WITH_AUTO_DOWN_CLEAN=Y

THREAD=0

CLUSTER_DATABASE=Y

###TBCM###

CM_PORT=61040

LOCAL_CLUSTER_ADDR=10.10.10.10

LOCAL_CLUSTER_PORT=61060

###TAS###

INSTANCE_TYPE=AS

AS_DISKSTRING="/dev/mapper/vg*-vol*"

tas2.tip (node tac2):

DB_NAME=tas

LISTENER_PORT=3000

MAX_SESSION_COUNT=10

TOTAL_SHM_SIZE=1G

MEMORY_TARGET=2G

BOOT_WITH_AUTO_DOWN_CLEAN=Y

THREAD=1

CLUSTER_DATABASE=Y

###TBCM###

CM_PORT=61040

LOCAL_CLUSTER_ADDR=10.10.10.20

LOCAL_CLUSTER_PORT=61060

###TAS###

INSTANCE_TYPE=AS

AS_DISKSTRING="/dev/mapper/vg*-vol*"

tac1.tip (node tac1):

DB_NAME=tibero

LISTENER_PORT=21000

#CONTROL_FILES="/dev/raw/raw2"

#DB_CREATE_FILE_DEST=/home/tac/tbdata/

#LOG_ARCHIVE_DEST=/home/tac/tbdata/archive

CONTROL_FILES="+DS0/c1.ctl"

DB_CREATE_FILE_DEST="+DS0"

LOG_ARCHIVE_DEST="+DS0/archive"

DBWR_CNT=1

DBMS_LOG_TOTAL_SIZE_LIMIT=300M

MAX_SESSION_COUNT=30

TOTAL_SHM_SIZE=1G

MEMORY_TARGET=2G

DB_BLOCK_SIZE=8K

DB_CACHE_SIZE=300M

CLUSTER_DATABASE=Y

THREAD=0

UNDO_TABLESPACE=UNDO0

########### NEW_CM #######################

CM_PORT=61040

#_CM_LOCAL_ADDR=192.168.116.11

LOCAL_CLUSTER_ADDR=10.10.10.10

LOCAL_CLUSTER_PORT=61050

###########################################

############# TAS #######################

USE_ACTIVE_STORAGE=Y

AS_PORT=3000

############################################

tac2.tip (node tac2):

DB_NAME=tibero

LISTENER_PORT=21000

#CONTROL_FILES="/dev/raw/raw2"

#DB_CREATE_FILE_DEST=/home/tac/tbdata/

#LOG_ARCHIVE_DEST=/home/tac/tbdata/archive

CONTROL_FILES="+DS0/c1.ctl"

DB_CREATE_FILE_DEST="+DS0"

LOG_ARCHIVE_DEST="+DS0/archive"

DBWR_CNT=1

DBMS_LOG_TOTAL_SIZE_LIMIT=300M

#TRACE_LOG_TOTAL_SIZE_LIMIT=30G

MAX_SESSION_COUNT=30

TOTAL_SHM_SIZE=1G

MEMORY_TARGET=2G

DB_BLOCK_SIZE=8K

DB_CACHE_SIZE=300M

CLUSTER_DATABASE=Y

THREAD=1

UNDO_TABLESPACE=UNDO1

########### NEW_CM #######################

CM_PORT=61040

#_CM_LOCAL_ADDR=192.168.116.12

LOCAL_CLUSTER_ADDR=10.10.10.20

LOCAL_CLUSTER_PORT=61050

###########################################

############# TAS #######################

USE_ACTIVE_STORAGE=Y

AS_PORT=3000

###########################################

tbdsn.tbr (identical on both nodes):

tibero=(

(INSTANCE=(HOST=192.168.116.11) (PORT=21000) (DB_NAME=tibero))

(INSTANCE=(HOST=192.168.116.12) (PORT=21000) (DB_NAME=tibero))

(LOAD_BALANCE=Y)

(USE_FAILOVER=Y)

)

tas1=(

(INSTANCE=(HOST=192.168.116.11) (PORT=3000) (DB_NAME=tas))

)

tas2=(

(INSTANCE=(HOST=192.168.116.12) (PORT=3000) (DB_NAME=tas))

)

tac1=(

(INSTANCE=(HOST=192.168.116.11) (PORT=21000) (DB_NAME=tibero))

)

tac2=(

(INSTANCE=(HOST=192.168.116.12) (PORT=21000) (DB_NAME=tibero))

)
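The node 1 and node 2 files are nearly identical, so it helps to see only the values that change. The sketch below prints them for a given node number. The function name node_settings is hypothetical, and the addresses are simply the two used in this walkthrough, not a general rule.

```shell
# node_settings N: print the settings that differ for node N (1 or 2),
# as they appear in the profile and .tip files of this walkthrough.
node_settings() {
    local n="$1"
    local thread addr
    case "$n" in
        1) addr=10.10.10.10 ;;
        2) addr=10.10.10.20 ;;
        *) echo "unknown node: $n" >&2; return 1 ;;
    esac
    thread=$((n - 1))   # THREAD and UNDO numbering start at 0
    printf 'TB_SID=tac%s\n' "$n"
    printf 'CM_SID=cm%s\n' "$n"
    printf 'CM_NAME=cm%s\n' "$n"
    printf 'CM_LOG_DEST=/home/tac/tibero7/instance/tac%s/log/cm\n' "$n"
    printf 'CM_GUARD_LOG_DEST=/home/tac/tibero7/instance/tac%s/log/cm_guard\n' "$n"
    printf 'THREAD=%s\n' "$thread"
    printf 'UNDO_TABLESPACE=UNDO%s\n' "$thread"
    printf 'LOCAL_CLUSTER_ADDR=%s\n' "$addr"
}

node_settings 1
node_settings 2
```

Everything else (ports, memory sizes, DB_NAME, the +DS0 paths) is shared by both nodes.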

Installing TAC

Copying the TAC configuration files on each server

- Copy the TAC configuration files into the corresponding tibero7 directories.

#TAC node 1

cp tas1.tip $TB_HOME/config/tas1.tip

cp tac1.tip $TB_HOME/config/tac1.tip

cp cm1.tip $TB_HOME/config/cm1.tip

cp tbdsn.tbr $TB_HOME/client/config/tbdsn.tbr

cp license.xml $TB_HOME/license/license.xml

#TAC node 2

cp tas2.tip $TB_HOME/config/tas2.tip

cp tac2.tip $TB_HOME/config/tac2.tip

cp cm2.tip $TB_HOME/config/cm2.tip

cp tbdsn.tbr $TB_HOME/client/config/tbdsn.tbr

cp license.xml $TB_HOME/license/license.xml

Tasks on TAC node 1

- Boot CM

tbcm -b

TB_SID=tas1 tbboot nomount

[tac@mysvr:/home/tac]$ . tac.profile

[tac@mysvr:/home/tac]$ tbcm -b

######################### WARNING #########################

# You are trying to start the CM-fence function. #

###########################################################

You are not 'root'. Proceed anyway without fence? (y/N)y

CM Guard daemon started up.

CM-fence enabled.

TBCM 7.1.1 (Build 258584)

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero cluster manager started up.

Local node name is (cm1:61040).

[tac@mysvr:/home/tac]$ TB_SID=tas1 tbboot nomount

Listener port = 3000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NOMOUNT mode).

- Create the TAS diskspace

- Connect to tas1 with tbsql and create the diskspace.

CREATE DISKSPACE ds0 FORCE EXTERNAL REDUNDANCY

FAILGROUP FG1 DISK

'/dev/mapper/vg1-vol_1G_01' NAME FG_DISK1,

'/dev/mapper/vg1-vol_1G_02' NAME FG_DISK2,

'/dev/mapper/vg1-vol_1G_03' NAME FG_DISK3,

'/dev/mapper/vg1-vol_1G_04' NAME FG_DISK4,

'/dev/mapper/vg2-vol_1G_01' NAME FG_DISK5,

'/dev/mapper/vg2-vol_1G_02' NAME FG_DISK6,

'/dev/mapper/vg2-vol_1G_03' NAME FG_DISK7,

'/dev/mapper/vg2-vol_1G_04' NAME FG_DISK8

/

[tac@mysvr:/home/tac]$ TB_SID=tas1 tbboot nomount

Listener port = 3000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NOMOUNT mode).

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tas1

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tas1.

SQL> CREATE DISKSPACE ds0 FORCE EXTERNAL REDUNDANCY

2 FAILGROUP FG1 DISK

3 '/dev/mapper/vg1-vol_1G_01' NAME FG_DISK1,

4 '/dev/mapper/vg1-vol_1G_02' NAME FG_DISK2,

5 '/dev/mapper/vg1-vol_1G_03' NAME FG_DISK3,

6 '/dev/mapper/vg1-vol_1G_04' NAME FG_DISK4,

7 '/dev/mapper/vg2-vol_1G_01' NAME FG_DISK5,

8 '/dev/mapper/vg2-vol_1G_02' NAME FG_DISK6,

9 '/dev/mapper/vg2-vol_1G_03' NAME FG_DISK7,

10 '/dev/mapper/vg2-vol_1G_04' NAME FG_DISK8

11 /

Diskspace 'DS0' created.

SQL> q

Disconnected.
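The CREATE DISKSPACE statement above is one line per device, with a comma after every disk except the last. A small generator avoids comma mistakes when the device list changes. This is only a sketch: gen_diskspace_sql is a hypothetical helper, and it prints the SQL for review rather than sending it to tbsql.

```shell
# gen_diskspace_sql NAME DEVICE...
# Prints a CREATE DISKSPACE statement in the same shape as the one above.
gen_diskspace_sql() {
    local name="$1"
    local n=0
    local total dev sep
    shift
    total=$#
    printf 'CREATE DISKSPACE %s FORCE EXTERNAL REDUNDANCY\n' "$name"
    printf 'FAILGROUP FG1 DISK\n'
    for dev in "$@"; do
        n=$((n + 1))
        # Every disk line ends with a comma except the last one.
        if [ "$n" -lt "$total" ]; then sep=','; else sep=''; fi
        printf "'%s' NAME FG_DISK%d%s\n" "$dev" "$n" "$sep"
    done
    printf '/\n'
}

# Build the eight 1G device paths used in this walkthrough.
set --
for vg in vg1 vg2; do
    for i in 1 2 3 4; do
        set -- "$@" "/dev/mapper/${vg}-vol_1G_0${i}"
    done
done
gen_diskspace_sql ds0 "$@"
```

The output can be pasted into the tbsql session on tas1 once it has been checked against the actual devices.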

Registering CM (Tibero Cluster Manager) membership on TAC node 1

cmrctl add network --nettype private --ipaddr 10.10.10.10 --portno 51210 --name net1

cmrctl add network --nettype public --ifname ens33 --name pub1

cmrctl add cluster --incnet net1 --pubnet pub1 --cfile "+/dev/mapper/vg1-vol_1G_01,/dev/mapper/vg1-vol_1G_02,/dev/mapper/vg1-vol_1G_03,/dev/mapper/vg1-vol_1G_04,/dev/mapper/vg2-vol_1G_01,/dev/mapper/vg2-vol_1G_02,/dev/mapper/vg2-vol_1G_03,/dev/mapper/vg2-vol_1G_04" --name cls1

cmrctl start cluster --name cls1

cmrctl add service --name tas --type as --cname cls1

cmrctl add as --name tas1 --svcname tas --dbhome $CM_HOME

cmrctl add service --name tibero --cname cls1

cmrctl add db --name tac1 --svcname tibero --dbhome $CM_HOME

cmrctl start as --name tas1

cmrctl show

[tac@mysvr:/home/tac]$ cmrctl start as --name tas1

Listener port = 3000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NORMAL mode).

BOOT SUCCESS! (MODE : NORMAL)

[tac@mysvr:/home/tac]$ cmrctl show

Resource List of Node cm1

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net1 UP (private) 10.10.10.10/51210

COMMON network pub1 UP (public) ens33

COMMON cluster cls1 UP inc: net1, pub: pub1

cls1 file cls1:0 UP +0

cls1 file cls1:1 UP +1

cls1 file cls1:2 UP +2

cls1 service tas UP Active Storage, Active Cluster (auto-restart: OFF)

cls1 service tibero DOWN Database, Active Cluster (auto-restart: OFF)

cls1 as tas1 UP(NRML) tas, /home/tac/tibero7, failed retry cnt: 0

cls1 db tac1 DOWN tibero, /home/tac/tibero7, failed retry cnt: 0

=====================================================================

[tac@mysvr:/home/tac]$
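The --cfile argument passed to cmrctl add cluster above is a "+" followed by the device paths joined with commas. The sketch below builds that string from a list of paths; join_cfile is a hypothetical helper, and the leading "+" is copied from the command above rather than derived from any other source.

```shell
# join_cfile DEVICE...
# Prints "+dev1,dev2,..." in the form used for --cfile above.
join_cfile() {
    # "$*" joins the arguments with the first character of IFS.
    local IFS=','
    printf '+%s\n' "$*"
}

join_cfile /dev/mapper/vg1-vol_1G_01 /dev/mapper/vg1-vol_1G_02
```

The same string has to be used on both nodes, so generating it once and reusing it avoids a mismatch between the two cmrctl add cluster commands.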

Activating the TAS diskspace

tbsql sys/tibero@tas1

ALTER DISKSPACE ds0 ADD THREAD 1;

Creating the database

cmrctl start db --name tac1 --option "-t nomount"

tbsql sys/tibero@tac1

CREATE DATABASE "tibero"

USER sys IDENTIFIED BY tibero

MAXINSTANCES 8

MAXDATAFILES 256

CHARACTER set MSWIN949

NATIONAL character set UTF16

LOGFILE GROUP 0 '+DS0/redo001.redo' SIZE 100M,

GROUP 1 '+DS0/redo011.redo' SIZE 100M,

GROUP 2 '+DS0/redo021.redo' SIZE 100M

MAXLOGFILES 100

MAXLOGMEMBERS 8

ARCHIVELOG

DATAFILE '+DS0/system001.dtf' SIZE 128M

AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED

DEFAULT TABLESPACE USR

DATAFILE '+DS0/usr001.dtf' SIZE 128M

AUTOEXTEND ON NEXT 16M MAXSIZE UNLIMITED

EXTENT MANAGEMENT LOCAL AUTOALLOCATE

DEFAULT TEMPORARY TABLESPACE TEMP

TEMPFILE '+DS0/temp001.dtf' SIZE 128M

AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED

EXTENT MANAGEMENT LOCAL AUTOALLOCATE

UNDO TABLESPACE UNDO0

DATAFILE '+DS0/undo001.dtf' SIZE 128M

AUTOEXTEND ON NEXT 128M MAXSIZE UNLIMITED

EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

[tac@mysvr:/home/tac]$ cmrctl start db --name tac1 --option "-t nomount"

Listener port = 21000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NOMOUNT mode).

BOOT SUCCESS! (MODE : NOMOUNT)

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tac1

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tac1.

SQL> CREATE DATABASE "tibero"

2 USER sys IDENTIFIED BY tibero

3 MAXINSTANCES 8

4 MAXDATAFILES 256

5 CHARACTER set MSWIN949

6 NATIONAL character set UTF16

7 LOGFILE GROUP 0 '+DS0/redo001.redo' SIZE 100M,

8 GROUP 1 '+DS0/redo011.redo' SIZE 100M,

9 GROUP 2 '+DS0/redo021.redo' SIZE 100M

10 MAXLOGFILES 100

11 MAXLOGMEMBERS 8

12 ARCHIVELOG

13 DATAFILE '+DS0/system001.dtf' SIZE 128M

14 AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED

15 DEFAULT TABLESPACE USR

16 DATAFILE '+DS0/usr001.dtf' SIZE 128M

17 AUTOEXTEND ON NEXT 16M MAXSIZE UNLIMITED

18 EXTENT MANAGEMENT LOCAL AUTOALLOCATE

19 DEFAULT TEMPORARY TABLESPACE TEMP

20 TEMPFILE '+DS0/temp001.dtf' SIZE 128M

21 AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED

22 EXTENT MANAGEMENT LOCAL AUTOALLOCATE

23 UNDO TABLESPACE UNDO0

24 DATAFILE '+DS0/undo001.dtf' SIZE 128M

25 AUTOEXTEND ON NEXT 128M MAXSIZE UNLIMITED

26 EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

Database created.

SQL> q

Disconnected.

Creating the UNDO tablespace, REDO logs, and related objects for TAC node 2

cmrctl start db --name tac1

tbsql sys/tibero@tac1

CREATE UNDO TABLESPACE UNDO1 DATAFILE '+DS0/undo011.dtf' SIZE 128M AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

create tablespace syssub datafile '+DS0/syssub001.dtf' SIZE 128M autoextend on next 8M EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 3 '+DS0/redo031.redo' size 100M;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 4 '+DS0/redo041.redo' size 100M;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 5 '+DS0/redo051.redo' size 100M;

ALTER DATABASE ENABLE PUBLIC THREAD 1;

[tac@mysvr:/home/tac]$ cmrctl start db --name tac1

Listener port = 21000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NORMAL mode).

BOOT SUCCESS! (MODE : NORMAL)

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tac1

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tac1.

SQL> CREATE UNDO TABLESPACE UNDO1 DATAFILE '+DS0/undo011.dtf' SIZE 128M AUTOEXTEND ON NEXT 8M MAXSIZE UNLIMITED EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

Tablespace 'UNDO1' created.

SQL> create tablespace syssub datafile '+DS0/syssub001.dtf' SIZE 128M autoextend on next 8M EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

Tablespace 'SYSSUB' created.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 3 '+DS0/redo031.redo' size 100M;

Database altered.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 4 '+DS0/redo041.redo' size 100M;

Database altered.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 5 '+DS0/redo051.redo' size 100M;

Database altered.

SQL> ALTER DATABASE ENABLE PUBLIC THREAD 1;

Database altered.
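The redo log statements for thread 1 differ only in the group number and the matching file name. As a sketch, the hypothetical helper gen_thread_logfiles below prints them for a given thread and list of groups, using the redo0<group>1.redo naming and the 100M size seen above. It assumes single-digit group numbers.

```shell
# gen_thread_logfiles THREAD GROUP...
# Prints the ADD LOGFILE statements followed by ENABLE PUBLIC THREAD.
gen_thread_logfiles() {
    local thread="$1"
    local g
    shift
    for g in "$@"; do
        printf "ALTER DATABASE ADD LOGFILE THREAD %d GROUP %d '+DS0/redo0%d1.redo' size 100M;\n" \
            "$thread" "$g" "$g"
    done
    printf 'ALTER DATABASE ENABLE PUBLIC THREAD %d;\n' "$thread"
}

gen_thread_logfiles 1 3 4 5
```

With the arguments shown, the output reproduces the four ALTER DATABASE statements run in the session above.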

Running the system.sh script

export TB_SID=tac1

$TB_HOME/scripts/system.sh -p1 tibero -p2 syscat -a1 y -a2 y -a3 y -a4 y

[tac@mysvr:/home/tac]$ export TB_SID=tac1

[tac@mysvr:/home/tac]$ $TB_HOME/scripts/system.sh -p1 tibero -p2 syscat -a1 y -a2 y -a3 y -a4 y

Creating additional system index...

Dropping agent table...

Creating client policy table ...

Creating text packages table ...

Creating the role DBA...

Creating system users & roles...

.........................

................. (output omitted)

Start TPR

Create tudi interface

Running /home/tac/tibero7/scripts/odci.sql...

Creating spatial meta tables and views ...

Registering default spatial reference systems ...

Registering unit of measure entries...

Creating internal system jobs...

Creating Japanese Lexer epa source ...

Creating internal system notice queue ...

Creating sql translator profiles ...

Creating agent table...

Creating additional static views using dpv...

Done.

For details, check /home/tac/tibero7/instance/tac1/log/system_init.log.

Tasks on TAC node 2

Registering CM (Tibero Cluster Manager) membership on TAC node 2

tbcm -b

cmrctl add network --nettype private --ipaddr 10.10.10.20 --portno 51210 --name net2

cmrctl add network --nettype public --ifname ens33 --name pub2

cmrctl add cluster --incnet net2 --pubnet pub2 --cfile "+/dev/mapper/vg1-vol_1G_01,/dev/mapper/vg1-vol_1G_02,/dev/mapper/vg1-vol_1G_03,/dev/mapper/vg1-vol_1G_04,/dev/mapper/vg2-vol_1G_01,/dev/mapper/vg2-vol_1G_02,/dev/mapper/vg2-vol_1G_03,/dev/mapper/vg2-vol_1G_04" --name cls1

cmrctl start cluster --name cls1

#cmrctl add service --name tas --type as --cname cls1

cmrctl add as --name tas2 --svcname tas --dbhome $CM_HOME

#cmrctl add service --name tibero --cname cls1

cmrctl add db --name tac2 --svcname tibero --dbhome $CM_HOME

[tac@mysvr:/home/tac]$ tbcm -b

CM Guard daemon started up.

CM-fence enabled.

TBCM 7.1.1 (Build 258584)

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero cluster manager started up.

Local node name is (cm2:61040).

[tac@mysvr:/home/tac]$ cmrctl add network --nettype private --ipaddr 10.10.10.20 --portno 51210 --name net2

Resource add success! (network, net2)

[tac@mysvr:/home/tac]$ cmrctl add network --nettype public --ifname ens33 --name pub2

Resource add success! (network, pub2)

[tac@mysvr:/home/tac]$ cmrctl add cluster --incnet net2 --pubnet pub2 --cfile "+/dev/mapper/vg1-vol_1G_01,/dev/mapper/vg1-vol_1G_02,/dev/mapper/vg1-vol_1G_03,/dev/mapper/vg1-vol_1G_04,/dev/mapper/vg2-vol_1G_01,/dev/mapper/vg2-vol_1G_02,/dev/mapper/vg2-vol_1G_03,/dev/mapper/vg2-vol_1G_04" --name cls1

Resource add success! (cluster, cls1)

[tac@mysvr:/home/tac]$ cmrctl start cluster --name cls1

MSG SENDING SUCCESS!

[tac@mysvr:/home/tac]$ cmrctl add as --name tas2 --svcname tas --dbhome $CM_HOME

ADD AS RESOURCE WITH ROOT PERMISSION!

Continue? (y/N) y

Resource add success! (as, tas2)

[tac@mysvr:/home/tac]$ cmrctl add db --name tac2 --svcname tibero --dbhome $CM_HOME

ADD DB RESOURCE WITH ROOT PERMISSION!

Continue? (y/N) y

Resource add success! (db, tac2)

Booting TAS2 and TAC2

cmrctl start as --name tas2

cmrctl start db --name tac2

[tac@mysvr:/home/tac]$ cmrctl start as --name tas2

Listener port = 3000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NORMAL mode).

BOOT SUCCESS! (MODE : NORMAL)

[tac@mysvr:/home/tac]$ cmrctl start db --name tac2

Listener port = 21000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NORMAL mode).

BOOT SUCCESS! (MODE : NORMAL)

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tac2

Connecting to TAC node 2 and verifying

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tac2

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tac2.

SQL> ls

NAME SUBNAME TYPE

---------------------------------- ------------------------ --------------------

SPH_REPORT_DIR DIRECTORY

TPR_REPORT_DIR DIRECTORY

TPR_TIP_DIR DIRECTORY

NULL_VERIFY_FUNCTION FUNCTION

TB_COMPLEXITY_CHECK FUNCTION

TB_STRING_DISTANCE FUNCTION

SQL> select * from v$instance;

INSTANCE_NUMBER INSTANCE_NAME

--------------- ----------------------------------------

DB_NAME

----------------------------------------

HOST_NAME PARALLEL

--------------------------------------------------------------- --------

THREAD# VERSION

---------- --------

STARTUP_TIME

--------------------------------------------------------------------------------

STATUS SHUTDOWN_PENDING

---------------- ----------------

TIP_FILE

--------------------------------------------------------------------------------

1 tac2

tibero

mysvr YES

1 7

2023/03/14

NORMAL NO

/home/tac/tibero7/config/tac2.tip

1 row selected.

Checking the status of all TAC nodes

- The cmrctl show all command shows the status of each TAC instance on every node.

$ cmrctl show all

Resource List of Node cm1

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net1 UP (private) 10.10.10.10/51210

COMMON network pub1 UP (public) ens33

COMMON cluster cls1 UP inc: net1, pub: pub1

cls1 file cls1:0 UP +0

cls1 file cls1:1 UP +1

cls1 file cls1:2 UP +2

cls1 service tas UP Active Storage, Active Cluster (auto-restart: OFF)

cls1 service tibero UP Database, Active Cluster (auto-restart: OFF)

cls1 as tas1 UP(NRML) tas, /home/tac/tibero7, failed retry cnt: 0

cls1 db tac1 UP(NRML) tibero, /home/tac/tibero7, failed retry cnt: 0

=====================================================================

Resource List of Node cm2

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net2 UP (private) 10.10.10.20/51210

COMMON network pub2 UP (public) ens33

COMMON cluster cls1 UP inc: net2, pub: pub2

cls1 file cls1:0 UP +0

cls1 file cls1:1 UP +1

cls1 file cls1:2 UP +2

cls1 service tas UP Active Storage, Active Cluster (auto-restart: OFF)

cls1 service tibero UP Database, Active Cluster (auto-restart: OFF)

cls1 as tas2 UP(NRML) tas, /home/tac/tibero7, failed retry cnt: 0

cls1 db tac2 UP(NRML) tibero, /home/tac/tibero7, failed retry cnt: 0

=====================================================================
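To check the cmrctl show all output in a script rather than by eye, the rows can be filtered on the STATUS column. The sketch below assumes the whitespace-separated column layout shown above (CLUSTER, TYPE, NAME, STATUS, DETAIL); down_resources is a hypothetical helper name.

```shell
# down_resources: read `cmrctl show all` output on stdin and print
# "TYPE NAME STATUS" for every service/as/db row whose STATUS does not
# start with UP (for example DOWN). Header and separator lines are ignored
# because their second field is not one of the three resource types.
down_resources() {
    awk '($2 == "service" || $2 == "as" || $2 == "db") && $4 !~ /^UP/ {
        print $2, $3, $4
    }'
}

# Example:
#   cmrctl show all | down_resources
```

An empty result means every service, as, and db resource on both nodes reports UP, as in the listing above.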
