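VMware environment setup

Before installing Tibero TAC, let's look at how the Linux servers are configured in VMware.

After installing CentOS 7.9, we copy that machine to create the two nodes, TAC1 and TAC2.

The CentOS 7.9 installation on VMware itself is not covered here.

VMware Linux environment configuration

Once the Linux machine that will host Tibero 7 is ready, clone it and use the clones as the TAC1 and TAC2 nodes.

Then add separate HDDs to use as shared disks.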

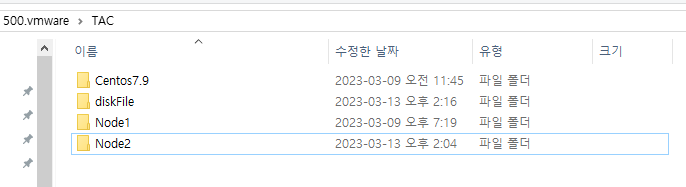

※ OS directory locations

- tac1 : D:\500.vmware\TAC\Node1

- tac2 : D:\500.vmware\TAC\Node2

- Shared disk file directory : diskFile

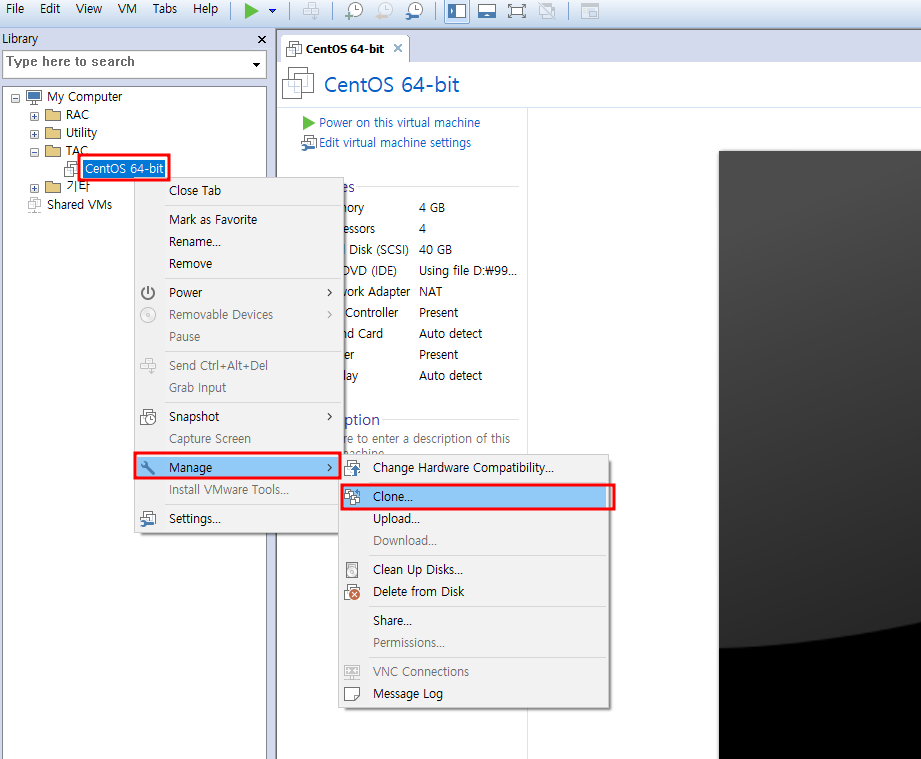

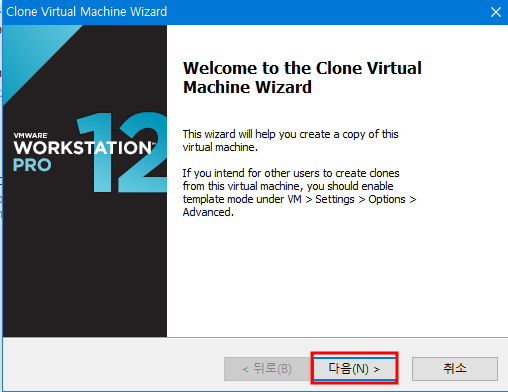

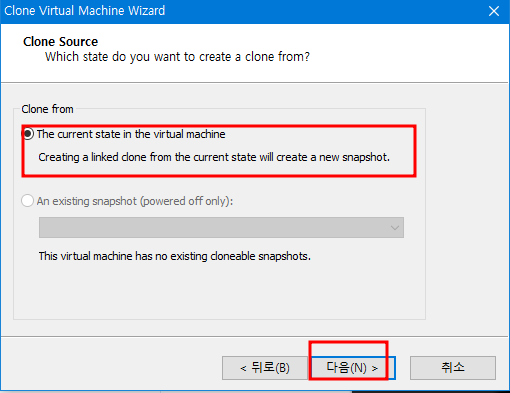

VMware clone

As described in the configuration above, clone the CentOS 7.9 installation image to create two new servers.


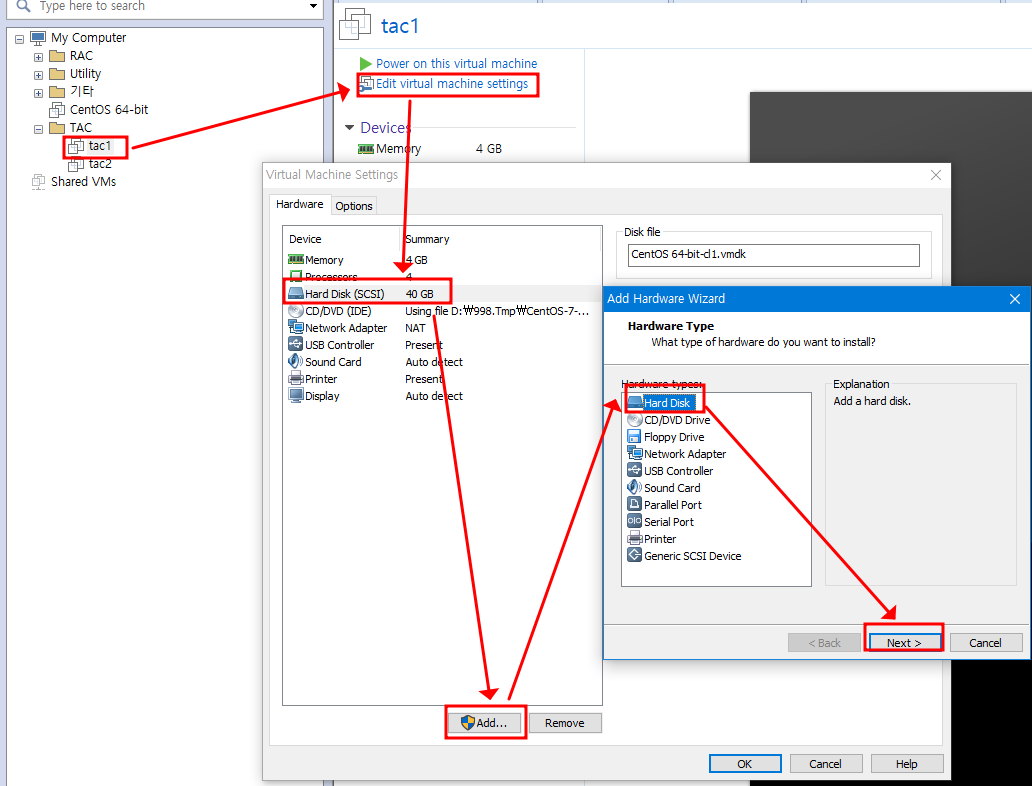

Adding VMware HDD disks

Add hard disks (HDD) to the cloned tac1 machine.

We add two 5 GB HDDs and use them as shared disks.


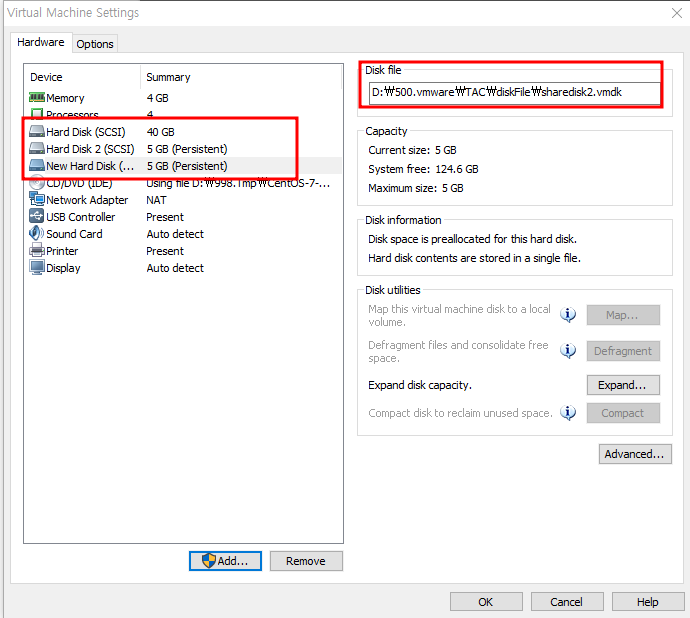

- D:\500.vmware\TAC\diskFile\sharedisk1.vmdk

- Add the disk under the diskFile directory with the name sharedisk1.vmdk.


- Create the sharedisk2.vmdk disk in the same way.


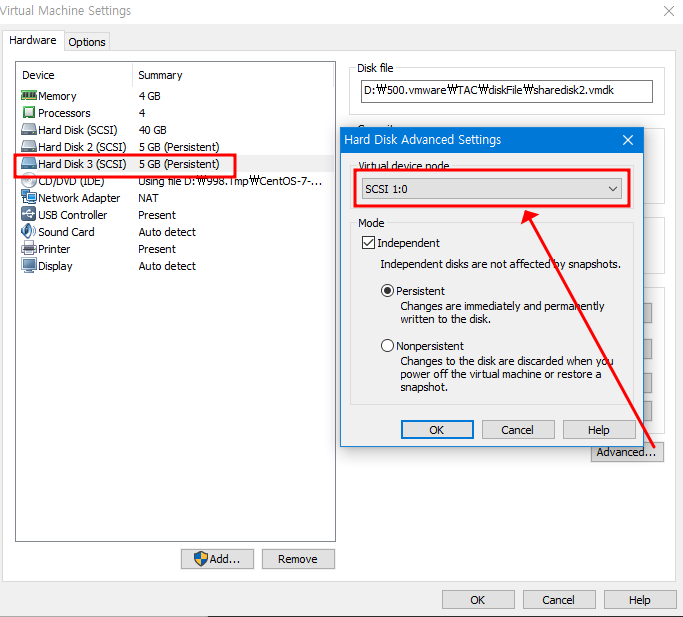

- Once the disks have been added successfully, change their Virtual device node.


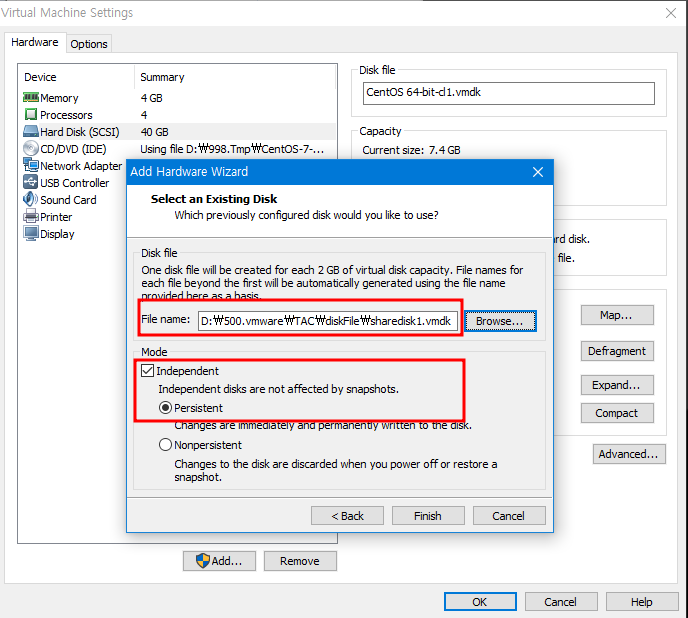

- To make the hard disks added on the tac1 machine usable from node 2 as well, add the same HDDs on the tac2 machine.

- tac2 machine -> Edit virtual machine -> Add -> Hard Disk -> Next ->

- SCSI -> Independent -> Next -> Use an existing virtual disk


- Add the second disk in the same way; you can then confirm that the HDDs added on tac1 also appear on the tac2 machine.


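Editing the shared disk files

Additional work is required before both servers can share the added hard disks.

Edit the configuration file (.vmx) of each virtual machine to which the disks were added.

Editing the tac1.vmx file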

#edit start

disk.locking = "FALSE"

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedbus = "VIRTUAL"

#edit end

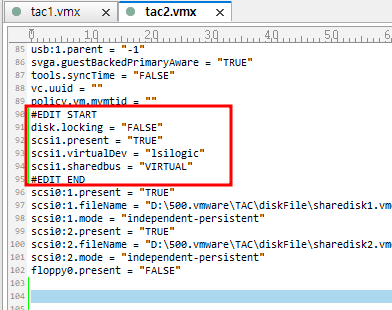

Editing the tac2.vmx file

#EDIT START

disk.locking = "FALSE"

scsi1.present = "TRUE"

scsi1.virtualDev = "lsilogic"

scsi1.sharedbus = "VIRTUAL"

#EDIT END

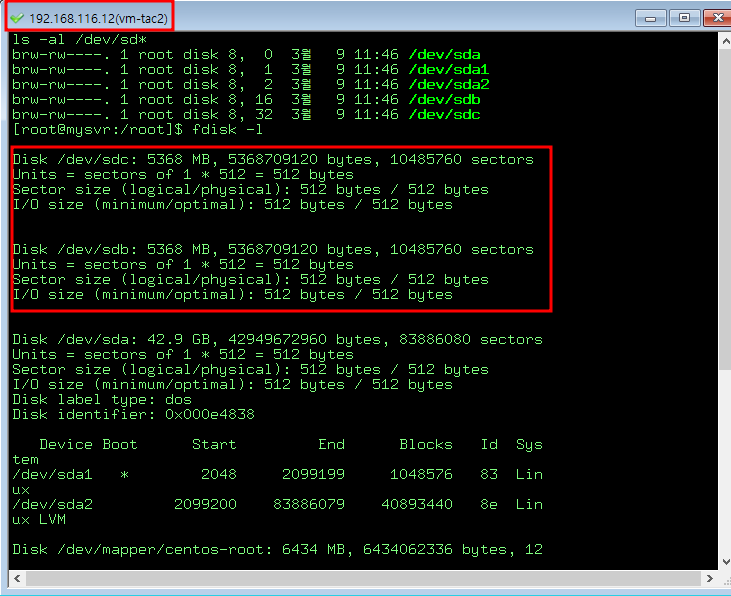
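Since the same four settings have to be present in both .vmx files, a small check can catch a forgotten line before the machines are booted. The following is only a sketch: the function name `check_vmx` is made up for illustration, and it assumes the .vmx file is reachable from a POSIX shell (for example Git Bash or WSL on the Windows host).

```shell
# Report which of the four shared-disk settings are absent from a .vmx file.
# $1 = path to the .vmx file
check_vmx() {
  for key in disk.locking scsi1.present scsi1.virtualDev scsi1.sharedbus; do
    grep -q "^$key *=" "$1" || echo "missing: $key"
  done
}

# Demo on a temporary file that only contains two of the four settings.
demo=$(mktemp)
printf '%s\n' 'disk.locking = "FALSE"' 'scsi1.present = "TRUE"' > "$demo"
check_vmx "$demo"
rm -f "$demo"
```

The function prints nothing when all four keys are found, so an empty result means the file is ready. It only checks that the keys exist, not their values.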

- To verify that the disks are shared correctly, boot the tac1 and tac2 machines and check the disk information.

- If the configuration is complete, log in to each server and use the ls command to see the added disks.

- The fdisk -l command also shows the added disks.
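The check described above can be scripted so that it gives the same answer on both nodes. This is a minimal sketch: the helper name `check_shared_disks` is hypothetical, and the device names sdb and sdc are the ones that appear later in this post, so adjust them if your system enumerates the disks differently.

```shell
# Print whether each named disk exists as a block device under /dev.
check_shared_disks() {
  for dev in "$@"; do
    if [ -b "/dev/$dev" ]; then
      echo "$dev: present"
    else
      echo "$dev: missing"
    fi
  done
}

# Run on each node after boot; both shared disks should be reported as present.
check_shared_disks sdb sdc
```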


Additional network configuration (Host-only)

- Add a private network for communication between the VMware machines (interconnect).

- Add a network adapter to each VM.

- Network Adapter -> Add -> Host-only

- Once the adapter has been added, log in to each server and assign an IP address.

- TAC1 : 10.10.10.10

- TAC2 : 10.10.10.20
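One way to assign these addresses on CentOS 7 is an ifcfg file under /etc/sysconfig/network-scripts. The sketch below only prints the file body. The interface name ens34 and the /24 prefix are assumptions that do not come from this post, so check the real name with `ip addr` first; `gen_ifcfg` is a hypothetical helper name.

```shell
# Print an ifcfg file body for a static private address.
# $1 = interface name (assumed), $2 = IP address
gen_ifcfg() {
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=none
NAME=$1
DEVICE=$1
ONBOOT=yes
IPADDR=$2
PREFIX=24
EOF
}

# Node tac1; on tac2 pass 10.10.10.20 instead.
gen_ifcfg ens34 10.10.10.10
```

As root, the output could be redirected to /etc/sysconfig/network-scripts/ifcfg-ens34 and the network service restarted.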


Creating RAW devices

Next, turn the added HDDs into raw devices.

- Create partitions

- Create physical volumes

- Create volume groups

- Create logical volumes

- Map the logical volumes to raw devices

Creating partitions

- Run fdisk /dev/sdb, then enter n -> p -> 1 -> Enter -> Enter -> w

fdisk /dev/sdb

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x96b3f8bb.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-10485759, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-10485759, default 10485759):

Using default value 10485759

Partition 1 of type Linux and of size 5 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.

[root@mysvr:/root]$

fdisk /dev/sdc

Welcome to fdisk (util-linux 2.23.2).

Changes will remain in memory only, until you decide to write them.

Be careful before using the write command.

Device does not contain a recognized partition table

Building a new DOS disklabel with disk identifier 0x536414f4.

Command (m for help): n

Partition type:

p primary (0 primary, 0 extended, 4 free)

e extended

Select (default p): p

Partition number (1-4, default 1):

First sector (2048-10485759, default 2048):

Using default value 2048

Last sector, +sectors or +size{K,M,G} (2048-10485759, default 10485759):

Using default value 10485759

Partition 1 of type Linux and of size 5 GiB is set

Command (m for help): w

The partition table has been altered!

Calling ioctl() to re-read partition table.

Syncing disks.
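The same key sequence (n, p, 1, Enter, Enter, w) can be piped into fdisk instead of being typed by hand. The sketch below defaults to a dry run and only prints what it would do. `fdisk_keystrokes`, `partition_disk` and the `DRY_RUN` variable are illustrative names, not part of any tool.

```shell
# Emit the answers fdisk expects: new, primary, partition 1,
# default first sector, default last sector, write.
fdisk_keystrokes() {
  printf 'n\np\n1\n\n\nw\n'
}

# $1 = disk device. Only touches the disk when DRY_RUN=0 is set explicitly.
partition_disk() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "would run: fdisk $1 with keys: $(fdisk_keystrokes | tr '\n' ',')"
  else
    fdisk_keystrokes | fdisk "$1"
  fi
}

partition_disk /dev/sdb
partition_disk /dev/sdc
```

Because writing a partition table is destructive, the default is to do nothing. Run it as root with DRY_RUN=0 only after confirming the device names.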

Creating physical volumes

- After checking the disks, create the physical volumes with the pvcreate command.

brw-rw----. 1 root disk 8, 0 3월 9 11:45 /dev/sda

brw-rw----. 1 root disk 8, 1 3월 9 11:45 /dev/sda1

brw-rw----. 1 root disk 8, 2 3월 9 11:45 /dev/sda2

brw-rw----. 1 root disk 8, 16 3월 9 12:41 /dev/sdb

brw-rw----. 1 root disk 8, 17 3월 9 12:41 /dev/sdb1

brw-rw----. 1 root disk 8, 32 3월 9 12:43 /dev/sdc

brw-rw----. 1 root disk 8, 33 3월 9 12:43 /dev/sdc1

pvcreate /dev/sdb1

pvcreate /dev/sdc1

pvdisplay

pvs

--- Physical volume ---

PV Name /dev/sda2

VG Name centos

PV Size <39.00 GiB / not usable 3.00 MiB

Allocatable yes

PE Size 4.00 MiB

Total PE 9983

Free PE 1

Allocated PE 9982

PV UUID f6dArV-MiVJ-lCgG-CfbY-4PCD-f1d0-uwVkeY

"/dev/sdb1" is a new physical volume of "<5.00 GiB"

--- NEW Physical volume ---

PV Name /dev/sdb1

VG Name

PV Size <5.00 GiB

Allocatable NO

PE Size 0

Total PE 0

Free PE 0

Allocated PE 0

PV UUID aly1AZ-7hzb-T4qM-JAdv-gCWS-Sj2f-5HVDDs

"/dev/sdc1" is a new physical volume of "<5.00 GiB"

--- NEW Physical volume ---

PV Name /dev/sdc1

VG Name

PV Size <5.00 GiB

Allocatable NO

PE Size 0

Total PE 0

Free PE 0

Allocated PE 0

PV UUID Q8sQZR-SBvK-mWRk-8j8o-Mdnp-eWHc-IUFZ2x

Creating volume groups

- Create the volume groups with the vgcreate command.

- vgremove [volume group name] removes a volume group.

vgcreate vg1 /dev/sdb1

vgcreate vg2 /dev/sdc1

vgdisplay

#remove the volume groups

#vgremove vg1 vg2

--- Volume group ---

VG Name vg1

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <5.00 GiB

PE Size 4.00 MiB

Total PE 1279

Alloc PE / Size 0 / 0

Free PE / Size 1279 / <5.00 GiB

VG UUID 6IWGT6-XHgP-OESg-3Gdh-QOUW-p01L-Cx5INn

--- Volume group ---

VG Name vg2

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 1

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 0

Open LV 0

Max PV 0

Cur PV 1

Act PV 1

VG Size <5.00 GiB

PE Size 4.00 MiB

Total PE 1279

Alloc PE / Size 0 / 0

Free PE / Size 1279 / <5.00 GiB

VG UUID NgC43A-4Cyq-gKHY-8ed7-8UKY-xh8n-p2YJfo

--- Volume group ---

VG Name centos

System ID

Format lvm2

Metadata Areas 1

Metadata Sequence No 4

VG Access read/write

VG Status resizable

MAX LV 0

Cur LV 3

Open LV 3

Max PV 0

Cur PV 1

Act PV 1

VG Size <39.00 GiB

PE Size 4.00 MiB

Total PE 9983

Alloc PE / Size 9982 / 38.99 GiB

Free PE / Size 1 / 4.00 MiB

VG UUID N8yCf2-fqDT-miVi-neoj-A1i8-mFWE-X7dMRv

Creating logical volumes

- lvcreate -L 100M -n cm_01 vg1

- lvcreate -L [size] -n [logical volume name] [volume group name]

- lvremove /dev/vg1/cm_01 -> removal command

#volume group vg1

lvcreate -L 128M -n vol_128m_01 vg1

lvcreate -L 128M -n vol_128m_02 vg1

lvcreate -L 128M -n vol_128m_03 vg1

lvcreate -L 128M -n vol_128m_04 vg1

lvcreate -L 128M -n vol_128m_05 vg1

lvcreate -L 128M -n vol_128m_06 vg1

lvcreate -L 128M -n vol_128m_07 vg1

lvcreate -L 128M -n vol_128m_08 vg1

lvcreate -L 128M -n vol_128m_09 vg1

lvcreate -L 128M -n vol_128m_10 vg1

lvcreate -L 128M -n vol_128m_11 vg1

lvcreate -L 128M -n vol_128m_12 vg1

lvcreate -L 128M -n vol_128m_13 vg1

lvcreate -L 256M -n vol_256m_14 vg1

lvcreate -L 512M -n vol_512m_15 vg1

lvcreate -L 512M -n vol_512m_16 vg1

lvcreate -L 1024M -n vol_1024m_17 vg1

lvcreate -L 1024M -n vol_1024m_19 vg1

#volume group vg2

lvcreate -L 128M -n vol_128m_01 vg2

lvcreate -L 128M -n vol_128m_02 vg2

lvcreate -L 128M -n vol_128m_03 vg2

lvcreate -L 128M -n vol_128m_04 vg2

lvcreate -L 128M -n vol_128m_05 vg2

lvcreate -L 128M -n vol_128m_06 vg2

lvcreate -L 128M -n vol_128m_07 vg2

lvcreate -L 128M -n vol_128m_08 vg2

lvcreate -L 128M -n vol_128m_09 vg2

lvcreate -L 256M -n vol_256m_10 vg2

lvcreate -L 512M -n vol_512m_11 vg2

lvcreate -L 1024M -n vol_1024m_12 vg2

lvcreate -L 2048M -n vol_2048m_13 vg2

- Check the logical volume information

lvdisplay vg1

lvdisplay vg2

lvs

--- Logical volume ---

LV Path /dev/vg1/contorl_01

LV Name contorl_01

VG Name vg1

LV UUID u8hnpe-DbAE-YgKj-qu5u-cPSI-HN3l-l0kYok

LV Write Access read/write

LV Creation host, time mysvr, 2023-03-09 16:34:02 +0900

LV Status available

# open 0

LV Size 128.00 MiB

Current LE 32

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:4

--- Logical volume ---

LV Path /dev/vg1/redo001

LV Name redo001

VG Name vg1

LV UUID dPbgfi-Uqtp-wObd-eW4e-QDtd-E7rj-ylgWpv

LV Write Access read/write

LV Creation host, time mysvr, 2023-03-09 16:34:02 +0900

LV Status available

# open 0

LV Size 128.00 MiB

Current LE 32

Segments 1

Allocation inherit

Read ahead sectors auto

- currently set to 8192

Block device 253:5

ls -al /dev/vg1 |awk '{print $9}'

lvs

pvs

LV VG Attr LSize Pool Origin Data% Meta% Move Log Cpy%Sync Convert

home centos -wi-ao---- 25.00g

root centos -wi-ao---- 5.99g

swap centos -wi-ao---- 8.00g

vol_1024m_17 vg1 -wi-a----- 1.00g

vol_1024m_19 vg1 -wi-a----- 1.00g

vol_128m_01 vg1 -wi-a----- 128.00m

vol_128m_02 vg1 -wi-a----- 128.00m

vol_128m_03 vg1 -wi-a----- 128.00m

vol_128m_04 vg1 -wi-a----- 128.00m

vol_128m_05 vg1 -wi-a----- 128.00m

vol_128m_06 vg1 -wi-a----- 128.00m

vol_128m_07 vg1 -wi-a----- 128.00m

vol_128m_08 vg1 -wi-a----- 128.00m

vol_128m_09 vg1 -wi-a----- 128.00m

vol_128m_10 vg1 -wi-a----- 128.00m

vol_128m_11 vg1 -wi-a----- 128.00m

vol_128m_12 vg1 -wi-a----- 128.00m

vol_128m_13 vg1 -wi-a----- 128.00m

vol_256m_14 vg1 -wi-a----- 256.00m

vol_512m_15 vg1 -wi-a----- 512.00m

vol_512m_16 vg1 -wi-a----- 512.00m

vol_1024m_12 vg2 -wi-a----- 1.00g

vol_128m_01 vg2 -wi-a----- 128.00m

vol_128m_02 vg2 -wi-a----- 128.00m

vol_128m_03 vg2 -wi-a----- 128.00m

vol_128m_04 vg2 -wi-a----- 128.00m

vol_128m_05 vg2 -wi-a----- 128.00m

vol_128m_06 vg2 -wi-a----- 128.00m

vol_128m_07 vg2 -wi-a----- 128.00m

vol_128m_08 vg2 -wi-a----- 128.00m

vol_128m_09 vg2 -wi-a----- 128.00m

vol_2048m_13 vg2 -wi-a----- 2.00g

vol_256m_10 vg2 -wi-a----- 256.00m

vol_512m_11 vg2 -wi-a----- 512.00m

[root@mysvr:/root]$ pvs

PV VG Fmt Attr PSize PFree

/dev/sda2 centos lvm2 a-- <39.00g 4.00m

/dev/sdb1 vg1 lvm2 a-- <5.00g 124.00m

/dev/sdc1 vg2 lvm2 a-- <5.00g 124.00m
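The long lists of lvcreate commands follow a regular naming pattern (vol_<size>m_<sequence>), so they can be generated with a loop rather than typed out. This sketch only prints the commands. `gen_lvcreate` and its "size:count" argument format are made up for illustration.

```shell
# Print lvcreate commands for one volume group.
# $1 = volume group, remaining args = "<size in MB>:<count>" in creation order.
# The sequence number keeps counting across sizes, as in the vg2 list.
gen_lvcreate() {
  vg=$1
  shift
  n=1
  for spec in "$@"; do
    size=${spec%%:*}
    count=${spec##*:}
    i=0
    while [ "$i" -lt "$count" ]; do
      printf 'lvcreate -L %sM -n vol_%sm_%02d %s\n' "$size" "$size" "$n" "$vg"
      n=$((n + 1))
      i=$((i + 1))
    done
  done
}

# Reproduces the 13 commands used for vg2 in this post.
gen_lvcreate vg2 128:9 256:1 512:1 1024:1 2048:1
```

Piping the output to `sh` as root would run the commands. The vg1 list in this post jumps from vol_1024m_17 to vol_1024m_19, so the generator would need a small adjustment to reproduce it exactly.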

RAW device mapping

Once the logical volumes have been created successfully, the last step is to map the RAW devices to the volumes.

The raw devices can be created by editing the /etc/udev/rules.d/70-persistent-ipoib.rules file.

- /etc/udev/rules.d/70-persistent-ipoib.rules

ACTION!="add|change", GOTO="raw_end"

#ENV{DM_VG_NAME}=="[volume group name]", ENV{DM_LV_NAME}=="[logical volume name]",RUN+="/usr/bin/raw /dev/raw/raw1 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_01",RUN+="/usr/bin/raw /dev/raw/raw1 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_02",RUN+="/usr/bin/raw /dev/raw/raw2 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_03",RUN+="/usr/bin/raw /dev/raw/raw3 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_04",RUN+="/usr/bin/raw /dev/raw/raw4 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_05",RUN+="/usr/bin/raw /dev/raw/raw5 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_06",RUN+="/usr/bin/raw /dev/raw/raw6 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_07",RUN+="/usr/bin/raw /dev/raw/raw7 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_08",RUN+="/usr/bin/raw /dev/raw/raw8 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_09",RUN+="/usr/bin/raw /dev/raw/raw9 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_10",RUN+="/usr/bin/raw /dev/raw/raw10 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_11",RUN+="/usr/bin/raw /dev/raw/raw11 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_12",RUN+="/usr/bin/raw /dev/raw/raw12 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_128m_13",RUN+="/usr/bin/raw /dev/raw/raw13 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_256m_14",RUN+="/usr/bin/raw /dev/raw/raw14 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_512m_15",RUN+="/usr/bin/raw /dev/raw/raw15 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_512m_16",RUN+="/usr/bin/raw /dev/raw/raw16 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_1024m_17",RUN+="/usr/bin/raw /dev/raw/raw17 %N"

ENV{DM_VG_NAME}=="vg1", ENV{DM_LV_NAME}=="vol_1024m_19",RUN+="/usr/bin/raw /dev/raw/raw19 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_01",RUN+="/usr/bin/raw /dev/raw/raw20 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_02",RUN+="/usr/bin/raw /dev/raw/raw21 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_03",RUN+="/usr/bin/raw /dev/raw/raw22 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_04",RUN+="/usr/bin/raw /dev/raw/raw23 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_05",RUN+="/usr/bin/raw /dev/raw/raw24 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_06",RUN+="/usr/bin/raw /dev/raw/raw25 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_07",RUN+="/usr/bin/raw /dev/raw/raw26 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_08",RUN+="/usr/bin/raw /dev/raw/raw27 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_128m_09",RUN+="/usr/bin/raw /dev/raw/raw28 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_256m_10",RUN+="/usr/bin/raw /dev/raw/raw29 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_512m_11",RUN+="/usr/bin/raw /dev/raw/raw30 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_1024m_12",RUN+="/usr/bin/raw /dev/raw/raw31 %N"

ENV{DM_VG_NAME}=="vg2", ENV{DM_LV_NAME}=="vol_2048m_13",RUN+="/usr/bin/raw /dev/raw/raw32 %N"

KERNEL=="raw*",OWNER="tac",GROUP="tibero",MODE="0660"

LABEL="raw_end"
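Each mapping rule differs only in the volume group, the logical volume name and the raw device number, so the rule lines can be generated instead of edited by hand. The sketch below prints rule lines in the same form as the file above. `raw_rule` and `gen_raw_rules` are hypothetical helper names.

```shell
# Print one udev rule. $1 = volume group, $2 = logical volume, $3 = raw number.
raw_rule() {
  printf 'ENV{DM_VG_NAME}=="%s", ENV{DM_LV_NAME}=="%s",RUN+="/usr/bin/raw /dev/raw/raw%s %%N"\n' "$1" "$2" "$3"
}

# Read "vg lv" pairs from stdin and number them from $1 upward.
gen_raw_rules() {
  n=$1
  while read -r vg lv; do
    [ -n "$vg" ] || continue
    raw_rule "$vg" "$lv" "$n"
    n=$((n + 1))
  done
}

# The first two vg2 volumes, which this post maps to raw20 and raw21.
gen_raw_rules 20 <<'EOF'
vg2 vol_128m_01
vg2 vol_128m_02
EOF
```

The ACTION, KERNEL and LABEL lines still have to be written around the generated rules, as shown in the file above.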

Registering the RAW devices and checking their status

The udevadm command registers and refreshes the raw device information.

- Reload the changes: udevadm control --reload-rules

- Add: udevadm trigger --action=add

- Check: raw -qa or ls -al /dev/raw*

udevadm control --reload-rules

udevadm trigger --action=add

raw -qa

ls -al /dev/raw*

[root@mysvr:/root]$ raw -qa

/dev/raw/raw1: bound to major 253, minor 3

/dev/raw/raw2: bound to major 253, minor 4

/dev/raw/raw3: bound to major 253, minor 5

/dev/raw/raw4: bound to major 253, minor 6

/dev/raw/raw5: bound to major 253, minor 7

/dev/raw/raw6: bound to major 253, minor 8

/dev/raw/raw7: bound to major 253, minor 9

/dev/raw/raw8: bound to major 253, minor 10

/dev/raw/raw9: bound to major 253, minor 11

/dev/raw/raw10: bound to major 253, minor 12

/dev/raw/raw11: bound to major 253, minor 13

/dev/raw/raw12: bound to major 253, minor 14

/dev/raw/raw13: bound to major 253, minor 15

/dev/raw/raw14: bound to major 253, minor 16

/dev/raw/raw15: bound to major 253, minor 17

/dev/raw/raw16: bound to major 253, minor 18

/dev/raw/raw17: bound to major 253, minor 19

/dev/raw/raw18: bound to major 253, minor 20

/dev/raw/raw19: bound to major 253, minor 25

/dev/raw/raw20: bound to major 253, minor 26

/dev/raw/raw21: bound to major 253, minor 27

/dev/raw/raw22: bound to major 253, minor 28

/dev/raw/raw23: bound to major 253, minor 29

/dev/raw/raw24: bound to major 253, minor 30

/dev/raw/raw25: bound to major 253, minor 31

/dev/raw/raw26: bound to major 253, minor 32

/dev/raw/raw27: bound to major 253, minor 33

/dev/raw/raw28: bound to major 253, minor 34

/dev/raw/raw29: bound to major 253, minor 35

/dev/raw/raw30: bound to major 253, minor 36

/dev/raw/raw31: bound to major 253, minor 37

/dev/raw/raw32: bound to major 253, minor 38

/dev/raw/raw33: bound to major 253, minor 39

/dev/raw/raw34: bound to major 253, minor 40
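With this many bindings it is easy to overlook one that did not come up. The sketch below compares captured `raw -qa` text against a list of expected raw numbers and prints the ones that are absent. `missing_raw` is a hypothetical helper, and the sample text is copied from the output above.

```shell
# $1 = captured `raw -qa` text, remaining args = raw numbers that should be bound.
missing_raw() {
  text=$1
  shift
  for n in "$@"; do
    printf '%s\n' "$text" | grep -q "^/dev/raw/raw$n:" || echo "raw$n"
  done
}

sample='/dev/raw/raw1: bound to major 253, minor 3
/dev/raw/raw2: bound to major 253, minor 4'

# raw3 is not in the sample, so it is the only name reported.
missing_raw "$sample" 1 2 3
```

On a real node the first argument could be "$(raw -qa)".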

Rebooting the Linux VMs

- Reboot each server to confirm that the settings were applied correctly.

- Boot the tac1 machine first, then the tac2 machine.

- If both are booted at the same time, Linux may fail to boot properly.

Tibero TAC installation

With the Linux server configuration finished, we can now proceed with the Tibero installation.

Disabling the firewall

systemctl stop firewalld

systemctl disable firewalld

[root@mysvr:/home/tac]$ systemctl disable firewalld

Removed symlink /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed symlink /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

Creating the Tibero account

groupadd tibero

adduser -d /home/tac -g tibero tac

Editing and applying kernel parameters

vi /etc/sysctl.conf

sysctl -p

Contents added to /etc/sysctl.conf:

kernel.shmmax = 4092966912

kernel.shmmni = 4096

kernel.shmall = 4000000000

kernel.sem = 10000 32000 10000 10000

kernel.sysrq = 1

kernel.core_uses_pid = 1

kernel.msgmnb = 65536

kernel.msgmax = 65536

kernel.msgmni = 2048

net.ipv4.tcp_syncookies = 1

net.ipv4.ip_forward = 1

net.ipv4.conf.default.accept_source_route = 0

net.ipv4.tcp_tw_recycle = 1

net.ipv4.tcp_max_syn_backlog = 4096

net.ipv4.conf.all.arp_filter = 1

net.ipv4.ip_local_port_range = 9000 65535

net.core.netdev_max_backlog = 10000

net.core.rmem_max = 4194304

net.core.wmem_max = 4194304

vm.overcommit_memory = 0

fs.file-max = 6815744

Preparing the binaries (Tibero7 & license.xml files)

- Preparing the binaries is not covered here.

Tibero profile and configuration files (environment setup)

Node addresses: tac1 (server node 1) is 192.168.116.11 and tac2 (server node 2) is 192.168.116.12.

tac1.profile

export TB_HOME=/home/tac/tibero7

export CM_HOME=/home/tac/tibero7

export TB_SID=tac1

export CM_SID=cm1

export PATH=.:$TB_HOME/bin:$TB_HOME/client/bin:$CM_HOME/scripts:$PATH

export LD_LIBRARY_PATH=.:$TB_HOME/lib:$TB_HOME/client/lib:/lib:/usr/lib:/usr/local/lib:/usr/lib/threads

export LD_LIBRARY_PATH_64=$TB_HOME/lib:$TB_HOME/client/lib:/usr/lib64:/usr/lib/64:/usr/ucblib:/usr/local/lib

export LIBPATH=$LD_LIBRARY_PATH

export LANG=ko_KR.eucKR

export LC_ALL=ko_KR.eucKR

export LC_CTYPE=ko_KR.eucKR

export LC_NUMERIC=ko_KR.eucKR

export LC_TIME=ko_KR.eucKR

export LC_COLLATE=ko_KR.eucKR

export LC_MONETARY=ko_KR.eucKR

export LC_MESSAGES=ko_KR.eucKR

tac2.profile

export TB_HOME=/home/tac/tibero7

export CM_HOME=/home/tac/tibero7

export TB_SID=tac2

export CM_SID=cm2

export PATH=.:$TB_HOME/bin:$TB_HOME/client/bin:$CM_HOME/scripts:$PATH

export LD_LIBRARY_PATH=.:$TB_HOME/lib:$TB_HOME/client/lib:/lib:/usr/lib:/usr/local/lib:/usr/lib/threads

export LD_LIBRARY_PATH_64=$TB_HOME/lib:$TB_HOME/client/lib:/usr/lib64:/usr/lib/64:/usr/ucblib:/usr/local/lib

export LIBPATH=$LD_LIBRARY_PATH

export LANG=ko_KR.eucKR

export LC_ALL=ko_KR.eucKR

export LC_CTYPE=ko_KR.eucKR

export LC_NUMERIC=ko_KR.eucKR

export LC_TIME=ko_KR.eucKR

export LC_COLLATE=ko_KR.eucKR

export LC_MONETARY=ko_KR.eucKR

export LC_MESSAGES=ko_KR.eucKR

tac1.tip

DB_NAME=tibero

LISTENER_PORT=21000

CONTROL_FILES="/dev/raw/raw2","/dev/raw/raw21"

DB_CREATE_FILE_DEST=/home/tac/tbdata/

LOG_ARCHIVE_DEST=/home/tac/tbdata/archive

DBWR_CNT=1

DBMS_LOG_TOTAL_SIZE_LIMIT=300M

MAX_SESSION_COUNT=30

TOTAL_SHM_SIZE=1G

MEMORY_TARGET=2G

DB_BLOCK_SIZE=8K

DB_CACHE_SIZE=300M

CLUSTER_DATABASE=Y

THREAD=0

UNDO_TABLESPACE=UNDO0

############### CM #######################

CM_PORT=61040

_CM_LOCAL_ADDR=192.168.116.11

LOCAL_CLUSTER_ADDR=10.10.10.10

LOCAL_CLUSTER_PORT=61050

###########################################

tac2.tip

DB_NAME=tibero

LISTENER_PORT=21000

CONTROL_FILES="/dev/raw/raw2","/dev/raw/raw21"

DB_CREATE_FILE_DEST=/home/tac/tbdata/

LOG_ARCHIVE_DEST=/home/tac/tbdata/archive

DBWR_CNT=1

DBMS_LOG_TOTAL_SIZE_LIMIT=300M

MAX_SESSION_COUNT=30

TOTAL_SHM_SIZE=2G

MEMORY_TARGET=3G

DB_BLOCK_SIZE=8K

DB_CACHE_SIZE=300M

CLUSTER_DATABASE=Y

THREAD=1

UNDO_TABLESPACE=UNDO1

################ CM #######################

CM_PORT=61040

_CM_LOCAL_ADDR=192.168.116.12

LOCAL_CLUSTER_ADDR=10.10.10.20

LOCAL_CLUSTER_PORT=61050

###########################################

cm1.tip

#Value used as the node ID when configuring the cluster

CM_NAME=cm1

#Port used to connect to CM when running cmrctl commands

CM_UI_PORT=61040

CM_HEARTBEAT_EXPIRE=450

CM_WATCHDOG_EXPIRE=400

LOG_LVL_CM=5

CM_RESOURCE_FILE=/home/tac/cm_resource/cmfile

CM_RESOURCE_FILE_BACKUP=/home/tac/cm_resource/cmfile_backup

CM_RESOURCE_FILE_BACKUP_INTERVAL=1

CM_LOG_DEST=/home/tac/tibero7/instance/tac1/log/cm

CM_GUARD_LOG_DEST=/home/tac/tibero7/instance/tac1/log/cm_guard

CM_FENCE=Y

CM_ENABLE_FAST_NET_ERROR_DETECTION=Y

_CM_CHECK_RUNLEVEL=Y

cm2.tip

#Value used as the node ID when configuring the cluster

CM_NAME=cm2

#Port used to connect to CM when running cmrctl commands

CM_UI_PORT=61040

CM_HEARTBEAT_EXPIRE=450

CM_WATCHDOG_EXPIRE=400

LOG_LVL_CM=5

CM_RESOURCE_FILE=/home/tac/cm_resource/cmfile

CM_RESOURCE_FILE_BACKUP=/home/tac/cm_resource/cmfile_backup

CM_RESOURCE_FILE_BACKUP_INTERVAL=1

CM_LOG_DEST=/home/tac/tibero7/instance/tac2/log/cm

CM_GUARD_LOG_DEST=/home/tac/tibero7/instance/tac2/log/cm_guard

CM_FENCE=Y

CM_ENABLE_FAST_NET_ERROR_DETECTION=Y

_CM_CHECK_RUNLEVEL=Y

tbdsn.tbr (identical on tac1 and tac2)

tibero=(

(INSTANCE=(HOST=192.168.116.11) (PORT=21000) (DB_NAME=tibero) )

(INSTANCE=(HOST=192.168.116.12) (PORT=21000) (DB_NAME=tibero) )

(LOAD_BALANCE=Y)

(USE_FAILOVER=Y)

)

tac1=(

(INSTANCE=(HOST=192.168.116.11) (PORT=21000) (DB_NAME=tibero) )

)

tac2=(

(INSTANCE=(HOST=192.168.116.12) (PORT=21000) (DB_NAME=tibero) )

)

TAC installation

- Copy the TAC configuration files into the corresponding tibero7 directories.

#TAC1 node

cp tac1.tip $TB_HOME/config/tac1.tip

cp cm1.tip $TB_HOME/config/cm1.tip

cp tbdsn.tbr $TB_HOME/client/config/tbdsn.tbr

cp license.xml $TB_HOME/license/license.xml

#TAC2 node

cp tac2.tip $TB_HOME/config/tac2.tip

cp cm2.tip $TB_HOME/config/cm2.tip

cp tbdsn.tbr $TB_HOME/client/config/tbdsn.tbr

cp license.xml $TB_HOME/license/license.xml
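The copy steps for the two nodes differ only in the node number, so one parameterized helper can serve both. This is a sketch that prints the commands rather than running them. `deploy_cmds` is a hypothetical name, and it assumes the files sit in the current directory as in the commands above.

```shell
# Print the copy commands for node number $1 (1 or 2).
# $TB_HOME is kept literal so it is expanded when the commands are run.
deploy_cmds() {
  n=$1
  printf 'cp tac%s.tip $TB_HOME/config/tac%s.tip\n' "$n" "$n"
  printf 'cp cm%s.tip $TB_HOME/config/cm%s.tip\n' "$n" "$n"
  printf 'cp tbdsn.tbr $TB_HOME/client/config/tbdsn.tbr\n'
  printf 'cp license.xml $TB_HOME/license/license.xml\n'
}

deploy_cmds 2
```

Piping the output to `sh` on the matching node, with the profile loaded so that TB_HOME is set, would perform the copies.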

Tasks on the TAC1 node

Registering the CM (Tibero Cluster Manager) network resources

#TAC1

tbcm -b

cmrctl add network --nettype private --ipaddr 10.10.10.10 --portno 51210 --name net1

cmrctl show

cmrctl add network --nettype public --ifname ens33 --name pub1

cmrctl show

cmrctl add cluster --incnet net1 --pubnet pub1 --cfile "/dev/raw/raw1" --name cls1

cmrctl start cluster --name cls1

cmrctl show

######################### WARNING #########################

# You are trying to start the CM-fence function. #

###########################################################

You are not 'root'. Proceed anyway without fence? (y/N)y

CM Guard daemon started up.

CM-fence enabled.

Tibero cluster manager (cm1) startup failed!

[tac@mysvr:/home/tac]$ cmrctl add network --nettype private --ipaddr 10.10.10.10 --portno 51210 --name net1

Resource add success! (network, net1)

[tac@mysvr:/home/tac]$ cmrctl add network --nettype public --ifname ens33 --name pub1

Resource add success! (network, pub1)

[tac@mysvr:/home/tac]$ cmrctl add cluster --incnet net1 --pubnet pub1 --cfile "/dev/raw/raw1" --name cls1

Resource add success! (cluster, cls1)

[tac@mysvr:/home/tac]$ cmrctl start cluster --name cls1

MSG SENDING SUCCESS!

[tac@mysvr:/home/tac]$ cmrctl show

Resource List of Node cm1

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net1 UP (private) 10.10.10.10/51210

COMMON network pub1 UP (public) ens33

COMMON cluster cls1 UP inc: net1, pub: pub1

cls1 file cls1:0 UP /dev/raw/raw1

=====================================================================

Registering the CM Tibero DB cluster service

#TAC Cluster

#service name = DB_NAME (tibero)

cmrctl add service --name tibero --cname cls1 --type db --mode AC

cmrctl show

cmrctl add db --name tac1 --svcname tibero --dbhome $CM_HOME

cmrctl show

Resource add success! (service, tibero)

[tac@mysvr:/home/tac]$ cmrctl add db --name tac1 --svcname tibero --dbhome $CM_HOME

Resource add success! (db, tac1)

[tac@mysvr:/home/tac]$ cmrctl show

Resource List of Node cm1

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net1 UP (private) 10.10.10.10/51210

COMMON network pub1 UP (public) ens33

COMMON cluster cls1 UP inc: net1, pub: pub1

cls1 file cls1:0 UP /dev/raw/raw1

cls1 service tibero DOWN Database, Active Cluster (auto-restart: OFF)

cls1 db tac1 DOWN tibero, /home/tac/tibero7, failed retry cnt: 0

=====================================================================
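When the resource list grows, it can help to filter `cmrctl show` output down to the rows that are not yet UP. The filter below is a sketch that assumes the whitespace-separated column layout shown above (cluster, type, name, status). `not_up` is a hypothetical helper name and the sample rows are copied from the listing.

```shell
# Print "type name status" for every resource row whose status does not start with UP.
not_up() {
  awk '$2 ~ /^(network|cluster|file|service|db)$/ && $4 !~ /^UP/ { print $2, $3, $4 }'
}

sample='COMMON network net1 UP (private) 10.10.10.10/51210
cls1 service tibero DOWN Database, Active Cluster (auto-restart: OFF)
cls1 db tac1 DOWN tibero, /home/tac/tibero7, failed retry cnt: 0'

printf '%s\n' "$sample" | not_up
```

On a node it would be used as `cmrctl show | not_up`. A status such as UP(NMNT) counts as up because the filter only looks at the prefix.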

Creating the database

- Boot the tac1 instance in nomount mode and check its status

cmrctl start db --name tac1 --option "-t nomount"

BOOT SUCCESS! (MODE : NOMOUNT)

[tac@mysvr:/home/tac]$ cmrctl show

Resource List of Node cm1

=====================================================================

CLUSTER TYPE NAME STATUS DETAIL

----------- -------- -------------- -------- ------------------------

COMMON network net1 UP (private) 10.10.10.10/51210

COMMON network pub1 UP (public) ens33

COMMON cluster cls1 UP inc: net1, pub: pub1

cls1 file cls1:0 UP /dev/raw/raw1

cls1 service tibero UP Database, Active Cluster (auto-restart: OFF)

cls1 db tac1 UP(NMNT) tibero, /home/tac/tibero7, failed retry cnt: 0

=====================================================================

[tac@mysvr:/home/tac]$

- Connect to the tac1 instance and create the database

- tbsql sys/tibero@tac1

CREATE DATABASE "tibero"

USER sys IDENTIFIED BY tibero

MAXINSTANCES 8

MAXDATAFILES 256

CHARACTER set MSWIN949

NATIONAL character set UTF16

LOGFILE GROUP 0 ('/dev/raw/raw3','/dev/raw/raw22') SIZE 127M,

GROUP 1 ('/dev/raw/raw4','/dev/raw/raw23') SIZE 127M,

GROUP 2 ('/dev/raw/raw5','/dev/raw/raw24') SIZE 127M

MAXLOGFILES 100

MAXLOGMEMBERS 8

ARCHIVELOG

DATAFILE '/dev/raw/raw10' SIZE 127M

AUTOEXTEND off

DEFAULT TABLESPACE USR

DATAFILE '/dev/raw/raw11' SIZE 127M

AUTOEXTEND off

DEFAULT TEMPORARY TABLESPACE TEMP

TEMPFILE '/dev/raw/raw12' SIZE 127M

AUTOEXTEND off

UNDO TABLESPACE UNDO0

DATAFILE '/dev/raw/raw9' SIZE 127M

AUTOEXTEND off ;

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tac1.

SQL> CREATE DATABASE "tibero"

2 USER sys IDENTIFIED BY tibero

3 MAXINSTANCES 8

4 MAXDATAFILES 256

5 CHARACTER set MSWIN949

6 NATIONAL character set UTF16

7 LOGFILE GROUP 0 ('/dev/raw/raw3','/dev/raw/raw22') SIZE 127M,

8 GROUP 1 ('/dev/raw/raw4','/dev/raw/raw23') SIZE 127M,

9 GROUP 2 ('/dev/raw/raw5','/dev/raw/raw24') SIZE 127M

10 MAXLOGFILES 100

11 MAXLOGMEMBERS 8

12 ARCHIVELOG

13 DATAFILE '/dev/raw/raw10' SIZE 127M

14 AUTOEXTEND off

15 DEFAULT TABLESPACE USR

16 DATAFILE '/dev/raw/raw11' SIZE 127M

17 AUTOEXTEND off

18 DEFAULT TEMPORARY TABLESPACE TEMP

19 TEMPFILE '/dev/raw/raw12' SIZE 127M

20 AUTOEXTEND off

21 UNDO TABLESPACE UNDO0

22 DATAFILE '/dev/raw/raw9' SIZE 127M

23 AUTOEXTEND off ;

Database created.

SQL> q

Creating redo and undo for the TAC2 node and enabling the thread

- Restart the tac1 node in NORMAL mode, then create the undo tablespace and redo log groups and enable thread 1

CREATE UNDO TABLESPACE UNDO1 DATAFILE '/dev/raw/raw28' SIZE 127M AUTOEXTEND off;

create tablespace syssub datafile '/dev/raw/raw13' SIZE 127M AUTOEXTEND off;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 3 ('/dev/raw/raw6','/dev/raw/raw25') size 127M;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 4 ('/dev/raw/raw7','/dev/raw/raw26') size 127M;

ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 5 ('/dev/raw/raw8','/dev/raw/raw27') size 127M;

ALTER DATABASE ENABLE PUBLIC THREAD 1;

Listener port = 21000

Tibero 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Tibero instance started up (NORMAL mode).

[tac@mysvr:/home/tac]$ tbsql sys/tibero@tac1

tbSQL 7

TmaxTibero Corporation Copyright (c) 2020-. All rights reserved.

Connected to Tibero using tac1.

SQL> CREATE UNDO TABLESPACE UNDO1 DATAFILE '/dev/raw/raw28' SIZE 127M AUTOEXTEND off;

Tablespace 'UNDO1' created.

SQL> create tablespace syssub datafile '/dev/raw/raw13' SIZE 127M autoextend on next 8M EXTENT MANAGEMENT LOCAL AUTOALLOCATE;

Tablespace 'SYSSUB' created.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 3 ('/dev/raw/raw6','/dev/raw/raw25') size 127M;

Database altered.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 4 ('/dev/raw/raw7','/dev/raw/raw26') size 127M;

Database altered.

SQL> ALTER DATABASE ADD LOGFILE THREAD 1 GROUP 5 ('/dev/raw/raw8','/dev/raw/raw27') size 127M;

Database altered.

SQL> ALTER DATABASE ENABLE PUBLIC THREAD 1;

Database altered.

SQL>
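The three redo log statements for thread 1 follow a fixed pattern: the group number and both raw device numbers each increase by one. The sketch below generates them. `add_logfile_sql` is a hypothetical helper, and the 127M size and device numbers are taken from the statements above.

```shell
# Print one ADD LOGFILE statement.
# $1 = thread, $2 = group, $3 = raw number of first member, $4 = raw number of second member
add_logfile_sql() {
  printf "ALTER DATABASE ADD LOGFILE THREAD %s GROUP %s ('/dev/raw/raw%s','/dev/raw/raw%s') size 127M;\n" "$1" "$2" "$3" "$4"
}

# Groups 3 to 5 on raw6/raw25, raw7/raw26 and raw8/raw27.
g=3
a=6
b=25
while [ "$g" -le 5 ]; do
  add_logfile_sql 1 "$g" "$a" "$b"
  g=$((g + 1))
  a=$((a + 1))
  b=$((b + 1))
done
```

The generated statements can be pasted into a tbsql session or saved to a script file.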

Running system.sh

$TB_HOME/scripts/system.sh -p1 tibero -p2 syscat -a1 y -a2 y -a3 y -a4 y

Creating additional system index...

Dropping agent table...

Creating client policy table ...

Creating text packages table ...

Creating the role DBA...

Creating system users & roles...

Creating example users...

..........................

Creating sql translator profiles ...

Creating agent table...

Creating additional static views using dpv...

Done.

For details, check /home/tac/tibero7/instance/tac1/log/system_init.log.

Tasks on the TAC2 node

- Log in to the second node's VM and perform the following steps.

Registering CM (Tibero Cluster Manager) membership

tbcm -b

cmrctl add network --nettype private --ipaddr 10.10.10.20 --portno 51210 --name net2

cmrctl show

cmrctl add network --nettype public --ifname ens33 --name pub2

cmrctl show

cmrctl add cluster --incnet net2 --pubnet pub2 --cfile "/dev/raw/raw1" --name cls1

cmrctl show

cmrctl start cluster --name cls1

cmrctl show

Registering the DB cluster service for the TAC2 instance

cmrctl add db --name tac2 --svcname tibero --dbhome $CM_HOME

Connecting to the TAC2 instance and checking its status

- cmrctl show all
